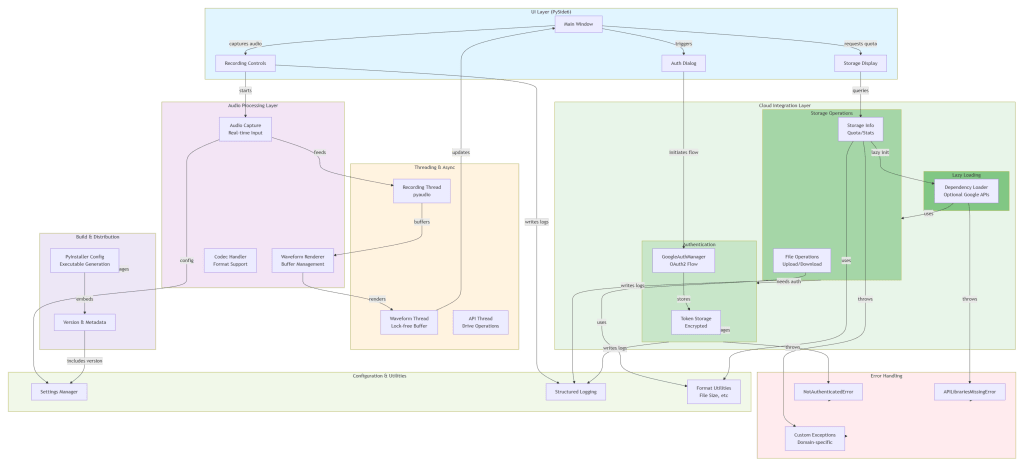

A Solutions Architect’s Deep Dive into Component-Based Design, Cloud Integration, and the Reality of “Minimal but Resilient”

The Evolution: From Minimal Vision to Layered Reality

The original post captured the aspiration: balance “small” with “production-grade.” Six months and several architectural refinements later, I can now articulate what that balance actually looks like when you’re knee-deep in real implementation decisions.

Voice Recorder Pro hasn’t grown in scope — it’s grown in thoughtfulness. That distinction matters, because it separates a polished MVP from a fragile one that looks polished until it doesn’t.

Technical Insights from Implementation Reality

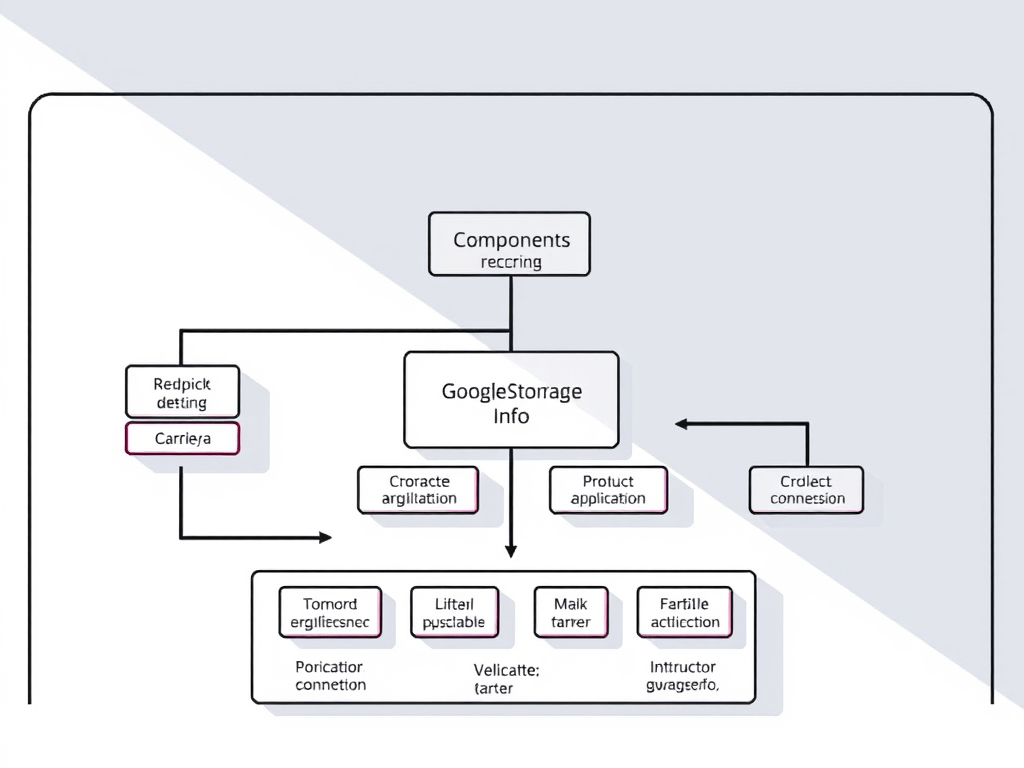

1. Component-Based Architecture Beats Monolithic “Simplicity”

What Changed:

Initially, the Drive integration lived as part of a larger manager class. As we added storage quota retrieval, file operations, and authentication state management, that “simple” monolith became a pressure cooker for side effects.

The Refactor:

We extracted GoogleStorageInfo as a standalone component — not for the sake of modularity theater, but because it solved three real problems:

- Testability: We could mock authentication without mocking the entire Drive client

- Separation of Concerns: Storage quota logic doesn’t need to know about file upload buffering

- Reusability: Other modules could query storage without coupling to file operations

```python
# This is what component separation actually looks like
from typing import Any, Optional


class GoogleStorageInfo:
    def __init__(self, auth_manager: Any, service_provider: Any = None):
        self.auth_manager = auth_manager
        self.service_provider = service_provider  # Testability hook
        self.service: Optional[Any] = None
```

Architect’s Reflection:

The temptation in minimal builds is to merge everything into one class to “reduce complexity.” The opposite is true: strategic separation reduces accidental complexity. The code is slightly longer, but the responsibility surface is smaller and clearer.

AI’s Role:

Copilot surfaced the Protocol abstraction pattern early, which clarified the contract between components without forcing implementation details upward.
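To make the Protocol idea concrete, here is a minimal sketch. The post doesn't show the real contract, so `AuthManagerLike`, `is_authenticated()`, `get_credentials()`, and `FakeAuthManager` are hypothetical names chosen for illustration, not the project's actual interface.

```python
from typing import Any, Optional, Protocol, runtime_checkable


@runtime_checkable
class AuthManagerLike(Protocol):
    """Hypothetical contract: what a storage component needs from auth."""

    def is_authenticated(self) -> bool: ...

    def get_credentials(self) -> Optional[Any]: ...


class FakeAuthManager:
    """Test double; satisfies the protocol structurally, with no inheritance."""

    def __init__(self, authenticated: bool = True) -> None:
        self._authenticated = authenticated

    def is_authenticated(self) -> bool:
        return self._authenticated

    def get_credentials(self) -> Optional[Any]:
        # A bare object stands in for real credentials in this sketch.
        return object() if self._authenticated else None


def describe_auth(auth: AuthManagerLike) -> str:
    """Depends only on the contract, not on a concrete manager class."""
    return "signed in" if auth.is_authenticated() else "signed out"
```

The fake never imports or subclasses anything from the real auth layer, which is what keeps the component testable in isolation.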

2. Error Handling as Architecture, Not Afterthought

What Changed:

Early iterations handled exceptions generically. Once we added Google API versioning concerns and network resilience, generic handling became a liability.

```python
try:
    self.service = build(
        "drive", "v3", credentials=credentials, cache_discovery=False
    )
except TypeError:
    # Fallback for older versions of the Google API client library
    # that don't accept the cache_discovery keyword
    self.service = build("drive", "v3", credentials=credentials)
```

This isn’t error handling for its own sake — it’s architectural resilience. The Google API library evolved; our code evolved with it.
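The fallback can be exercised without the Google library installed. The sketch below uses two stand-in functions, `new_build` and `old_build` (both hypothetical), to mimic a library version that accepts `cache_discovery` and one that doesn't. `build_drive_service` is an illustrative wrapper, not the project's code.

```python
from typing import Any


def new_build(name: str, version: str, credentials: Any = None,
              cache_discovery: bool = True) -> str:
    """Stand-in for a library version that accepts cache_discovery."""
    return f"{name}:{version}:cache_discovery={cache_discovery}"


def old_build(name: str, version: str, credentials: Any = None) -> str:
    """Stand-in for an older version without the cache_discovery keyword."""
    return f"{name}:{version}:legacy"


def build_drive_service(build: Any, credentials: Any) -> str:
    """Try the newer call signature first, then fall back to the older one."""
    try:
        return build("drive", "v3", credentials=credentials, cache_discovery=False)
    except TypeError:
        # An unexpected keyword argument raises TypeError: retry without it.
        # Caveat: this also retries on any other TypeError raised inside build.
        return build("drive", "v3", credentials=credentials)
```

One trade-off worth knowing: catching `TypeError` is broad, so a genuine type bug inside the call would trigger the same retry.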

The Lesson:

In production desktop apps, your error handling is part of your UX contract. A cryptic exception crash versus a graceful fallback is the difference between “frustrating” and “professional.”

Custom Exceptions:

```python
class NotAuthenticatedError(Exception):
    """Raised when user is not authenticated with Google."""


class APILibrariesMissingError(Exception):
    """Raised when required Google API libraries are unavailable."""
```

These aren’t ceremony — they’re the language your application speaks to its UI layer. When the UI catches NotAuthenticatedError, it knows exactly how to respond. Generic Exception tells it nothing.
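Here is a rough sketch of what that conversation with the UI layer might look like. The helper `storage_status_message`, its `fetch_quota` argument, and the message wording are all hypothetical. The exception classes are repeated only so the example runs on its own.

```python
from typing import Any, Callable


class NotAuthenticatedError(Exception):
    """Raised when user is not authenticated with Google."""


class APILibrariesMissingError(Exception):
    """Raised when required Google API libraries are unavailable."""


def storage_status_message(fetch_quota: Callable[[], Any]) -> str:
    """Hypothetical UI helper: turn specific failures into user guidance."""
    try:
        quota = fetch_quota()
    except NotAuthenticatedError:
        return "Sign in to Google to see your Drive storage."
    except APILibrariesMissingError:
        return "Cloud features are unavailable: Google API libraries are not installed."
    # Anything else is unexpected and is deliberately left to propagate.
    return f"Drive storage in use: {quota}"
```

Each `except` branch maps to a different user action, which is exactly the information a bare `Exception` would lose.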

AI’s Contribution:

Copilot suggested the explicit exception hierarchy and reminded me not to swallow exceptions silently, a habit usually associated with junior developers that even experienced ones slip into under time pressure.

3. Lazy Loading and Deferred Initialization: Production Necessity, Not Optimization Luxury

What Changed:

Early design initialized Google API clients on app startup. Fast machines didn’t notice the latency. Real user machines with slower networks did.

```python
def _get_service(self) -> Any:
    """Get or create Google Drive service."""
    if self.service_provider is not None:
        return self.service_provider  # Testing escape hatch

    # ... authentication checks ...

    if not self.service:
        # Lazy initialization happens here, only when needed
        ...  # client construction elided in this excerpt

    return self.service
```

Why It Matters:

- Cold start time matters for user perception

- Not every session needs Drive access immediately

- Tests can inject mock services without triggering real initialization

Architect’s Perspective:

This is where “minimal” and “production” intersect. We could have initialized everything upfront (simpler code, measurably worse experience). Instead, we paid a small complexity cost for a noticeable user experience gain.
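The pattern is easy to verify in isolation. The sketch below is a stripped-down stand-in, not the project's class: `LazyService`, its `factory` argument, and `fake_factory` are hypothetical names, and the factory stands in for the real client construction.

```python
from typing import Any, Callable, List, Optional


class LazyService:
    """Builds an expensive client on first use, then reuses it."""

    def __init__(self, factory: Callable[[], Any],
                 service_provider: Optional[Any] = None) -> None:
        self._factory = factory  # hypothetical: wraps the real client construction
        self.service_provider = service_provider  # pre-built service for tests
        self.service: Optional[Any] = None

    def get(self) -> Any:
        if self.service_provider is not None:
            return self.service_provider  # injected service wins, factory never runs
        if self.service is None:
            self.service = self._factory()  # runs once, on the first call
        return self.service


calls: List[str] = []


def fake_factory() -> str:
    """Records each construction so the laziness is observable."""
    calls.append("built")
    return "drive-client"


lazy = LazyService(fake_factory)
built_before_use = len(calls)  # nothing is constructed at startup
first = lazy.get()
second = lazy.get()  # reuses the cached client
```

Construction cost moves from app startup to the first moment Drive is actually needed, and sessions that never touch Drive never pay it.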

4. Cloud Integration: Layering Abstractions Without Over-Engineering

What Changed:

The _lazy module emerged as a pattern for handling optional dependencies:

```python
def has_google_apis_available():
    """Check if Google API libraries are available."""
    # Implementation details here


def import_build():
    """Lazily import the build function."""
    # Only import when actually needed
```

Why This Matters for Minimal Builds:

Voice Recorder Pro can function offline. Google Drive integration is a feature, not a core requirement. By deferring the import of heavy Google API libraries, we:

- Reduce baseline memory footprint

- Avoid hard dependencies on Google’s libraries

- Allow graceful degradation if the user doesn’t have them installed

The Reality Check:

Some might argue this adds complexity. In a truly minimal build, you’d just import googleapiclient at the top and accept the dependency. But “minimal” that breaks under missing libraries isn’t production-ready — it’s just small.
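One plausible way to fill in those two helpers uses the standard library's `importlib`. This is a sketch under assumptions, not the project's implementation. The `package` and `module_name` parameters are my additions so the behaviour can be checked against any module. `googleapiclient.discovery` is the module that provides `build` in google-api-python-client.

```python
import importlib
import importlib.util
from typing import Any, Callable


class APILibrariesMissingError(Exception):
    """Raised when required Google API libraries are unavailable."""


def has_google_apis_available(package: str = "googleapiclient") -> bool:
    """Return True if the top-level package can be found, without importing it."""
    return importlib.util.find_spec(package) is not None


def import_build(module_name: str = "googleapiclient.discovery") -> Callable[..., Any]:
    """Import the module only when called and return its build function."""
    try:
        module = importlib.import_module(module_name)
    except ImportError as exc:
        # Translate the generic import failure into the app's own vocabulary.
        raise APILibrariesMissingError(f"Cannot import {module_name}") from exc
    return module.build
```

The availability check lets the UI disable cloud features up front, while `import_build` defers the actual import cost until a Drive operation is requested.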

5. Logging as Observability, Not Debug Output

What Changed:

```python
logger.error("Failed to initialize Drive service - missing libraries: %s", e)
logger.error("Storage quota error: %s", e)
```

These aren’t for developers troubleshooting locally. They’re for understanding what happened in a user’s environment after an issue is reported.

Why It Matters:

When a user says “I can’t access my recordings in Drive,” you need to know:

- Was it an authentication failure?

- A missing library?

- A network timeout?

- A quota limit?

Structured logging gives you that signal. Generic logging gives you noise.
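A small sketch of how those questions could map onto log output follows. The reason codes, the logger name, and both helper functions are hypothetical. Quota errors are left out because recognising them depends on the API's error payload, which the post doesn't show.

```python
import logging

logger = logging.getLogger("voice_recorder.drive")  # hypothetical logger name


class NotAuthenticatedError(Exception):
    """Raised when user is not authenticated with Google."""


class APILibrariesMissingError(Exception):
    """Raised when required Google API libraries are unavailable."""


def classify_drive_failure(exc: Exception) -> str:
    """Map an exception to a short, searchable reason code (hypothetical codes)."""
    if isinstance(exc, NotAuthenticatedError):
        return "auth_failure"
    if isinstance(exc, APILibrariesMissingError):
        return "missing_library"
    if isinstance(exc, (TimeoutError, ConnectionError)):
        return "network"
    return "unknown"


def log_drive_failure(exc: Exception) -> str:
    """Log one line per failure with a stable reason field, then return it."""
    reason = classify_drive_failure(exc)
    logger.error("Drive operation failed reason=%s detail=%s", reason, exc)
    return reason
```

With a stable `reason=` field, a support log can be filtered for one failure category instead of being read line by line.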

AI’s Contribution:

Copilot kept me honest about logging specificity — not logging too much (noise) and not too little (mystery).

The Expanded AI Partnership Model

As a Production Readiness Auditor

Copilot flagged scenarios I’d glossed over: “What if the user has an old version of the Google API library?” That led to the cache_discovery fallback. Not groundbreaking, but the difference between “works on my machine” and “works for most users.”

As a Pattern Librarian

When implementing storage quota with percentage calculations and formatted output, Copilot surfaced the distinction between business logic (usedPercent) and presentation logic (format_file_size). Small separation, large clarity.
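A minimal sketch of that separation is below. The names `usedPercent` and `format_file_size` come from the project, but the signatures and rounding here are assumptions, with `used_percent` as a Python-style stand-in for the former. The sketch also assumes a missing or zero limit means there is no fixed quota to report against.

```python
from typing import Optional


def used_percent(usage_bytes: int, limit_bytes: Optional[int]) -> Optional[float]:
    """Business logic: share of the quota in use, or None if there is no limit."""
    if not limit_bytes:
        return None
    return round(usage_bytes / limit_bytes * 100, 1)


def format_file_size(num_bytes: int) -> str:
    """Presentation logic: human-readable size using 1024-based units."""
    units = ["B", "KB", "MB", "GB", "TB"]
    size = float(num_bytes)
    index = 0
    # Step up one unit at a time until the value fits or units run out.
    while size >= 1024 and index < len(units) - 1:
        size /= 1024
        index += 1
    return f"{size:.1f} {units[index]}"
```

Because the percentage function returns a number rather than a string, it can drive a progress bar, a warning threshold, or a test assertion without any parsing.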

As a Dependency Analyst

“Have you considered what happens if this library isn’t installed?” That question is what forced the lazy-loading pattern and the graceful degradation strategy.

What “Minimal but Production-Ready” Actually Means

After this iteration, here’s what we’ve crystallized:

| Aspect | Minimal ≠ | But Also ≠ | Actually Means |
|---|---|---|---|
| Code Volume | Omit features | Omit rigor | Every line earns its place |
| Dependencies | Hard-code everything | Bloat with abstraction | Strategic lazy-loading |
| Error Handling | Crash and burn | Swallow silently | Inform and recover |
| Logging | Debug dumps | Nothing | Actionable signals |
| Testing | Skip it | 100% coverage | Test failure paths |

The Uncomfortable Truth About Minimal Builds

Here’s what the original post didn’t quite say: minimal is harder than elaborate.

Building a 10-feature app with full error recovery is straightforward — you have surface area. Building a 3-feature app that survives all the ways those 3 features can fail? That requires discipline.

Voice Recorder Pro’s codebase is genuinely small. But every component — from the lazy importer to the custom exceptions to the Protocol abstractions — exists because it solved a real problem. That’s not accidental elegance; it’s architectural intention.

Closing: The Refinement Loop

The original post framed this as “Vision + Copilot = Production App.” True, but incomplete.

The fuller story is: Vision + Implementation Reality + Copilot Collaboration + Relentless Refinement = Production-Grade Minimal Build.

The refinement loop — where you discover that your “simple” architecture needs strategic complexity, where you realize that error handling isn’t overhead but contract enforcement, where you learn that lazy loading isn’t optimization but user empathy — that’s where AI’s real value emerges.

Copilot doesn’t replace this loop. It accelerates it, interrogates it, and sometimes redirects it toward patterns you wouldn’t have found in documentation.

That’s not autopilot. That’s partnership.