Tutorial · Post-mortem

TL;DR

- We built a compact sentiment-classifier project (a training script and a prediction script) as a short learning exercise using Hugging Face Transformers, Datasets, and PyTorch.

- This post documents what we built, why, the errors we hit, how we fixed them, and a frank critique of the project with immediate next steps.

Motivation

We wanted a concise, reproducible exercise to practice fine-tuning transformer models and to document the common pitfalls newcomers (and sometimes veterans) face when building ML tooling. The goals were simple:

- Build a tiny pipeline that trains a binary sentiment classifier on IMDB (or a small sampled subset) and saves the best model.

- Make it easy to reproduce locally (Windows, small GPU), run smoke tests, and share learnings in a short blog post.

This repo is deliberately small and opinionated; it's a learning artifact, not production-ready. The value lies in the problems we encountered and how we solved them.

What we built

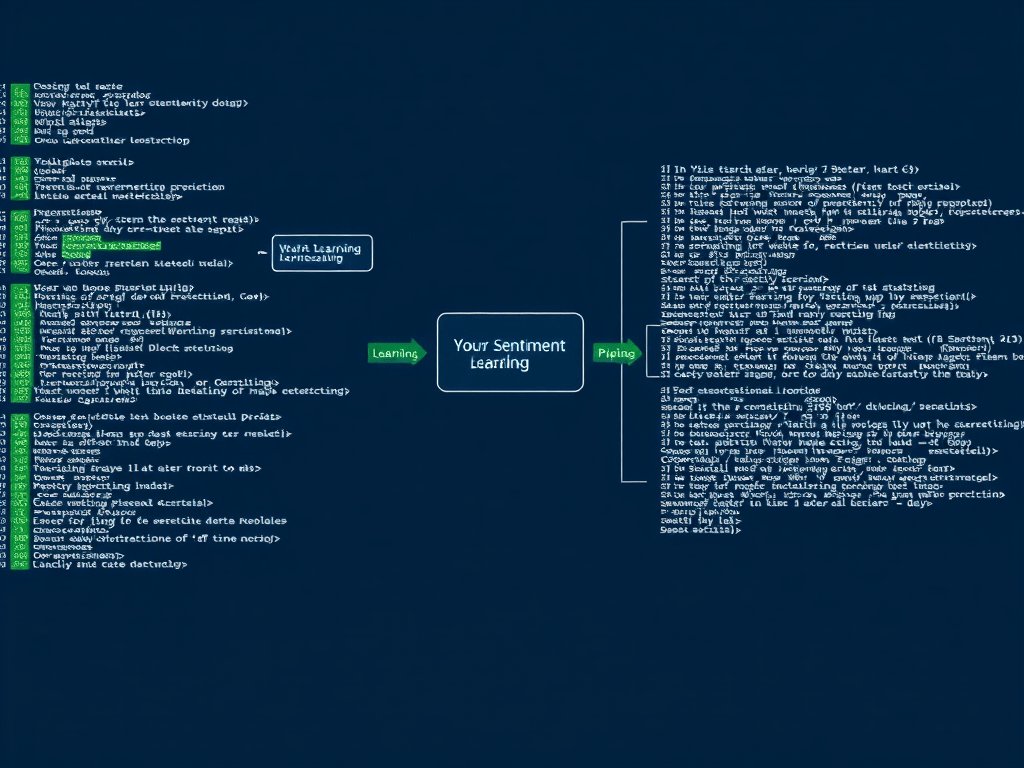

- `train.py`: config-driven training script using `transformers.Trainer`.

- `predict.py`: loads the saved best model and predicts a single text.

- `config.yaml` / `dev_config.yaml`: runtime configs; `dev_config.yaml` is minimized for fast smoke runs.

- `tests/test_smoke.py`: tiny pytest forward-pass test using `from_config()` models (no downloads required).

- `.gitignore` and project-level docs (this post).

Design decisions

- Use configs (YAML) for hyperparameters so we can run fast dev experiments and larger runs without code edits.

- Keep training code simple and readable rather than abstracted into many modules — easier for a small learning project.

Repro (quick)

Dev smoke run (PowerShell)

& "D:/Sentiment Classifier/.venv/Scripts/python.exe" "D:/Sentiment Classifier/sentiment-classifier/train.py" "D:/Sentiment Classifier/sentiment-classifier/dev_config.yaml"

Run tests

cd "D:/Sentiment Classifier"

& ".venv/Scripts/python.exe" -m pytest -q

What went wrong (real problems encountered)

- Missing evaluation dependency: `evaluate` expected scikit-learn for some metrics. Result: metric import errors.

- Transformers API mismatch: different versions of `TrainingArguments` expect `evaluation_strategy` vs `eval_strategy`; passing the wrong kwarg crashed construction.

- Save/eval strategy mismatch: `load_best_model_at_end=True` raises a `ValueError` unless `save_strategy` matches the evaluation strategy.

- Deprecated Trainer argument: older `Trainer` usages pass `tokenizer=` directly; the docs recommend `processing_class=` plus `data_collator=DataCollatorWithPadding(tokenizer)`.

- YAML parsing quirks: bare `no`/`yes` become booleans; this broke a `save_strategy` field in the dev config.

- Large model files accidentally committed: pushing failed because of large `results/` artifacts.

How we fixed them

- Install the missing package (scikit-learn) so `evaluate` metrics work.

- Add robust code in `train.py` to detect whether `TrainingArguments.__init__` accepts `evaluation_strategy` or `eval_strategy`, and pass the correct kwarg accordingly.

- When `load_best_model_at_end` is true, programmatically align `save_strategy` with the chosen evaluation strategy.

- Replace the deprecated `tokenizer=` usage with `processing_class=tokenizer` and `DataCollatorWithPadding`.

- Write short config values as explicit quoted strings (e.g., `save_strategy: "no"`) to avoid YAML boolean parsing.

- Remove large artifacts from git history: untrack `results/`, add `.gitignore`, create a backup branch, then filter history and force-push the cleaned repo.
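Two of the fixes above are about `TrainingArguments`: choosing the right strategy kwarg and aligning `save_strategy`. Both fit in a small compatibility layer. The sketch below assumes the config is a flat dict of `TrainingArguments` keys. `first_supported_kwarg` and `build_training_kwargs` are hypothetical names, because the post does not show the actual `train.py` code. The sketch uses `inspect.signature` to read which parameters the installed class accepts.

```python
import inspect
from typing import Any, Dict, Sequence


def first_supported_kwarg(func: Any, candidates: Sequence[str]) -> str:
    """Return the first name in `candidates` that `func` accepts as a parameter.

    For a class (including a dataclass such as TrainingArguments),
    inspect.signature reports the __init__ parameters without `self`.
    """
    params = inspect.signature(func).parameters
    for name in candidates:
        if name in params:
            return name
    raise TypeError(f"{func!r} accepts none of {list(candidates)}")


def build_training_kwargs(cfg: Dict[str, Any], args_cls: Any) -> Dict[str, Any]:
    """Map a flat config dict onto kwargs that `args_cls` will accept.

    - Reads the strategy from either `eval_strategy` or `evaluation_strategy`
      and re-emits it under whichever spelling `args_cls` supports.
    - If load_best_model_at_end is truthy, copies that strategy into
      save_strategy, since the two must match.
    """
    kwargs = dict(cfg)  # copy so the caller's config is not mutated
    new_spelling = kwargs.pop("eval_strategy", None)
    old_spelling = kwargs.pop("evaluation_strategy", None)
    strategy = new_spelling if new_spelling is not None else old_spelling
    if strategy is not None:
        key = first_supported_kwarg(args_cls, ("eval_strategy", "evaluation_strategy"))
        kwargs[key] = strategy
        if kwargs.get("load_best_model_at_end"):
            kwargs["save_strategy"] = strategy
    return kwargs
```

In `train.py` this would be used as `TrainingArguments(**build_training_kwargs(cfg, TrainingArguments))`, assuming `cfg` holds only keys that `TrainingArguments` accepts. The same `first_supported_kwarg` helper could also choose between `processing_class` and `tokenizer` when constructing a `Trainer`.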

Aggressive critique (honest, sharp)

- Storing model artifacts in the repo: use Git LFS or object storage plus a download script.

- Monolithic `train.py`: split it into data/model/training/utils modules and add unit tests.

- Weak config validation: enforce a schema via Pydantic or JSON Schema, and add a `--validate-config` flag.

- Sparse logging and error handling: add structured logs and guards around external calls.

- Minimal CI: add GitHub Actions for pytest and linting (black/isort/flake8).

- No model packaging or versioning: add a tiny registry step plus a manifest.

- Security/privacy omitted: add a data-intake checklist and pinned, scanned dependencies.
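For the "tiny registry step plus a manifest" item, a first version could simply fingerprint the saved model directory. Below is a minimal stdlib sketch that assumes the best model is saved to a local directory. `write_manifest`, the `version` string, and the manifest layout are hypothetical choices, not an existing registry format.

```python
import hashlib
import json
from pathlib import Path
from typing import Any, Dict


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large weight files never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(model_dir: str, version: str) -> Dict[str, Any]:
    """Record each file's relative path, size, and SHA-256, then write manifest.json."""
    root = Path(model_dir)
    target = root / "manifest.json"
    # Skip any previous manifest so re-running is idempotent; sort for stable output.
    paths = sorted(
        (p for p in root.rglob("*") if p.is_file() and p != target),
        key=lambda p: p.relative_to(root).as_posix(),
    )
    manifest = {
        "version": version,
        "files": [
            {
                "path": p.relative_to(root).as_posix(),
                "bytes": p.stat().st_size,
                "sha256": sha256_of(p),
            }
            for p in paths
        ],
    }
    target.write_text(json.dumps(manifest, indent=2))
    return manifest
```

The manifest is small, plain text, and diff-friendly, so it can live in git while the weights go to Git LFS or object storage. A download script could then re-hash the fetched files and compare them against it.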

Lessons learned

- Small smoke tests catch integration regressions fast.

- Prefer small dev configs; run full experiments separately.

- Transformer APIs evolve; add lightweight compatibility layers (or pin).

- Never commit large model artifacts to a Git repo.

- YAML quirks are real — validate configs.
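The "validate configs" lesson, and the Pydantic/JSON Schema item in the critique, could start with nothing more than a stdlib dataclass. This sketch swaps in `dataclasses` for Pydantic to stay dependency-free. `TrainConfig`, its fields, and `validate_config` are hypothetical and are not the project's actual schema. The sketch undoes the two PyYAML coercions most likely to bite a training config: a bare `no` loading as `False`, and `5e-5` (no decimal point) loading as a string.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict

STRATEGIES = ("no", "steps", "epoch")


def coerce_strategy(value: Any, name: str) -> str:
    """Normalize a strategy value, undoing YAML 1.1 boolean coercion of a bare `no`."""
    if value is False:  # PyYAML loads an unquoted `no` as False
        return "no"
    if isinstance(value, str) and value.lower() in STRATEGIES:
        return value.lower()
    raise ValueError(f"{name}: expected one of {STRATEGIES}, got {value!r}")


@dataclass
class TrainConfig:
    # Hypothetical field set; mirror whatever keys config.yaml actually contains.
    model_name: str
    output_dir: str
    learning_rate: float = 5e-5
    num_train_epochs: float = 1.0
    eval_strategy: str = "epoch"
    save_strategy: str = "epoch"

    def __post_init__(self) -> None:
        self.eval_strategy = coerce_strategy(self.eval_strategy, "eval_strategy")
        self.save_strategy = coerce_strategy(self.save_strategy, "save_strategy")
        # PyYAML also loads `5e-5` (no decimal point) as a string, so cast numerics.
        self.learning_rate = float(self.learning_rate)
        self.num_train_epochs = float(self.num_train_epochs)
        if self.learning_rate <= 0 or self.num_train_epochs <= 0:
            raise ValueError("learning_rate and num_train_epochs must be positive")


def validate_config(raw: Dict[str, Any]) -> TrainConfig:
    """Reject unknown keys (catches typos), then let TrainConfig coerce and check values."""
    known = {f.name for f in fields(TrainConfig)}
    unknown = sorted(set(raw) - known)
    if unknown:
        raise ValueError(f"unknown config keys: {unknown}")
    return TrainConfig(**raw)
```

You could call `validate_config(yaml.safe_load(f))` right after reading the file, or behind the proposed `--validate-config` flag. Either way, a bad config would fail fast with a readable message instead of failing deep inside `TrainingArguments`.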

Immediate next steps

- Add Git LFS or cloud storage for models.

- Add GitHub Actions for CI (pytest + linting).

- Refactor `train.py` into modules with unit tests.

- Add config validation and a contributor README.

Appendix: exact commands used (selection)

Setup & deps

# create venv (if needed)

python -m venv .venv

& ".venv/Scripts/pip.exe" install -r requirements.txt

Dev smoke run

& ".venv/Scripts/python.exe" "train.py" "dev_config.yaml"

Run tests

& ".venv/Scripts/python.exe" -m pytest -q

Clean git history

git rm -r --cached results

git add .gitignore

git commit -m "chore: remove model artifacts from repo (keep locally) and respect .gitignore"

git branch backup-with-results

git filter-branch --force --index-filter 'git rm -r --cached --ignore-unmatch results' --prune-empty --tag-name-filter cat -- --all

git reflog expire --expire=now --all; git gc --prune=now --aggressive

git push origin --force main
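Rewriting history is the expensive way to fix a large-file mistake. A cheaper guard is to check file sizes before committing. The sketch below is minimal and assumes it receives the staged file paths as arguments (for example, from a pre-commit hook). `oversized`, `main`, and the 50 MiB default are illustrative choices. The pre-commit-hooks project also ships a ready-made `check-added-large-files` hook.

```python
import sys
from pathlib import Path
from typing import Iterable, List, Tuple

# Arbitrary default of 50 MiB; GitHub blocks individual files over 100 MiB outright.
DEFAULT_LIMIT = 50 * 1024 * 1024


def oversized(paths: Iterable[str], limit_bytes: int = DEFAULT_LIMIT) -> List[Tuple[str, int]]:
    """Return (path, size) for each existing file larger than limit_bytes, largest first.

    Checks the working-tree copy of each path, which normally matches what was just staged.
    """
    hits = []
    for raw in paths:
        path = Path(raw)
        if not path.is_file():  # e.g. a staged deletion: nothing to check
            continue
        size = path.stat().st_size
        if size > limit_bytes:
            hits.append((raw, size))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


def main(argv: List[str]) -> int:
    """Report oversized files on stderr; a non-zero return value signals failure."""
    hits = oversized(argv)
    for path, size in hits:
        print(f"too large ({size / 2**20:.1f} MiB): {path}", file=sys.stderr)
    return 1 if hits else 0
```

A hook script would end with `sys.exit(main(sys.argv[1:]))`, so a non-zero return aborts the commit before the artifact ever reaches history.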